Load ULog and PX4 files in Python

PX4 is the leading software standard for drones and unmanned aerial vehicles (UAVs). PX4 provides a platform for controlling autonomous flight and navigation systems.

ULog is a binary log file format used by the PX4 autopilot system for recording flight data. It captures various flight parameters such as sensor readings, GPS data, battery status, and actuator outputs.

In Nominal, ULog data is used for post-flight analysis, debugging, and performance benchmarking. This guide uses flight logs from the PX4 community hub to demonstrate an automated pipeline for ingesting ULog files into Nominal.

Download flight logs

For convenience, Nominal hosts sample test data on Hugging Face. To download the sample data for this guide, copy-paste the snippet below.

(Make sure to first install huggingface_hub with pip3 install huggingface_hub).
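A minimal sketch of the download step, assuming the sample logs live in a Hugging Face dataset repository. The helper name `download_sample_log` is hypothetical, and the repository ID and file name are left as parameters because this guide does not state them here.

```python
from pathlib import Path


def download_sample_log(repo_id: str, filename: str) -> Path:
    """Download one file from a Hugging Face repo and return its local path."""
    # Imported lazily so this module loads even before huggingface_hub is installed.
    from huggingface_hub import hf_hub_download

    local_path = hf_hub_download(
        repo_id=repo_id,      # placeholder: the repo that hosts the sample flight logs
        filename=filename,    # placeholder: the ULog file name inside that repo
        repo_type="dataset",  # assumption: the samples are published as a dataset repo
    )
    return Path(local_path)
```

The returned path points into the local Hugging Face cache, so repeated calls with the same arguments do not download the file again.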

Convert ULog to CSV

Install pyulog: pip install pyulog.

It provides ulog2csv, which converts the PX4 ULog file to a folder of CSV logs:
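One way to drive the converter from a Python script is to call the `ulog2csv` command through `subprocess`. This is a sketch rather than the guide's original snippet; the helper names and the example paths are hypothetical.

```python
import subprocess
from pathlib import Path


def build_ulog2csv_command(ulog_path: str, output_dir: str) -> list[str]:
    """Return the argument list that converts one ULog file into a folder of CSVs."""
    # -o selects the output directory; ulog2csv writes one CSV per logged topic.
    return ["ulog2csv", "-o", output_dir, ulog_path]


def convert_ulog(ulog_path: str, output_dir: str) -> None:
    """Create the output folder, then run ulog2csv and raise if it fails."""
    Path(output_dir).mkdir(parents=True, exist_ok=True)
    subprocess.run(build_ulog2csv_command(ulog_path, output_dir), check=True)
```

For example, `convert_ulog("flight.ulg", "csv_logs")` would write the CSV files into a `csv_logs` folder, assuming `ulog2csv` is on the PATH after installing pyulog.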

List all CSV flight logs

Depending on the flight, the ULog-to-CSV converter will output ~50 CSV flight logs. To get an idea of what these log files are, we’ll print each of their file names.
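A short sketch of the listing step using only the standard library. The folder name passed in is whatever output directory was given to the converter; `list_csv_logs` and `print_csv_logs` are hypothetical helper names.

```python
from pathlib import Path


def list_csv_logs(csv_dir: str) -> list[Path]:
    """Return every CSV file directly inside csv_dir, sorted by file name."""
    return sorted(Path(csv_dir).glob("*.csv"))


def print_csv_logs(csv_dir: str) -> None:
    """Print one file name per line."""
    for path in list_csv_logs(csv_dir):
        print(path.name)
```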

~50 CSV flight log names are printed.

Inspect PX4 GPS log

Let’s inspect a single log file (..log_vehicle_gps_position_0.csv). Note that the timestamp column is common across log files and always in relative microseconds, meaning microseconds from the start of the flight or whenever the sensor began recording.
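The sketch below previews the first rows of a log and converts the relative `timestamp` column from microseconds to seconds. It uses the standard `csv` module instead of a dataframe library to stay dependency-free; `preview_log` and the added `timestamp_s` key are hypothetical names.

```python
import csv
from itertools import islice


def preview_log(csv_path: str, limit: int = 5) -> list[dict]:
    """Return the first `limit` rows, each with the timestamp also given in seconds."""
    with open(csv_path, newline="") as handle:
        rows = list(islice(csv.DictReader(handle), limit))
    for row in rows:
        # `timestamp` is in relative microseconds, so divide by 1e6 to get seconds.
        row["timestamp_s"] = int(row["timestamp"]) / 1_000_000
    return rows
```

All other columns are returned as strings exactly as they appear in the file, so numeric fields need an explicit conversion before plotting.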

Let’s plot the recorded latitude vs longitude from the GPS sensor.

Upload a single PX4 GPS log file

Connect to Nominal

Concepts

- Base URL: The URL through which the Nominal API is accessed (typically https://api.gov.nominal.io/api).

- Workspace: A mechanism for isolating datasets; each user has one or more workspaces, and data in one workspace cannot be seen from another. Note that one token may access multiple workspaces.

- Profile: A combination of base URL, API key, and workspace.

There are two primary ways of authenticating the Nominal Client. The first is to use a profile stored on disk, and the second is to use a token directly.

Storing credentials to disk

Run the following in a terminal and follow on-screen prompts to set up a connection profile:

Here, “default” can be any name chosen to represent this profile (reminder: a profile represents a base URL, API key, and workspace).

The profile will be stored in ~/.config/nominal/config.yml, and can then be used to create a client:

If you have previously used nom to store credentials, prior to the availability of profiles, you will need to migrate your old configuration file (~/.nominal.yml) to the new format (~/.config/nominal/config.yml).

You can do this with the following command:

Directly using credentials in your scripts

NOTE: you should never share your Nominal API key with anyone. We therefore recommend that you not save it in your code and/or scripts.
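One common way to keep the key out of source code is to read it from an environment variable at run time. The sketch below assumes a variable named `NOMINAL_TOKEN`; that name and the `load_api_token` helper are illustrative choices, not something the Nominal client requires.

```python
import os


def load_api_token(env_var: str = "NOMINAL_TOKEN") -> str:
    """Return the API key stored in an environment variable, or fail loudly."""
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(f"Set the {env_var} environment variable to your Nominal API key")
    return token
```

The returned string can then be handed to the client at construction time, so the key never appears in a script that might be committed or shared.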

Upload to Nominal

After upload, navigate to Nominal’s Datasets page (login required). You’ll see your CSV at the top!

Create a PX4 log lookup table

The script below creates a dataframe with each log file’s path, start time, and end time.

Some log files don’t have valid start and end times - we’ll remove these rows from the dataframe.
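A dependency-free sketch of the lookup table, built as a list of dictionaries rather than a dataframe. It assumes that a log is "valid" when it contains at least one integer value in its `timestamp` column; the helper names and the `start_us` / `end_us` keys are hypothetical.

```python
import csv
from pathlib import Path


def log_time_range(csv_path: Path):
    """Return (start, end) in relative microseconds, or None if no valid timestamps."""
    stamps = []
    with open(csv_path, newline="") as handle:
        for row in csv.DictReader(handle):
            value = (row.get("timestamp") or "").strip()
            if value.isdigit():
                stamps.append(int(value))
    return (min(stamps), max(stamps)) if stamps else None


def build_lookup_table(csv_dir: str) -> list[dict]:
    """One entry per log file with its path, start time, and end time."""
    table = []
    for path in sorted(Path(csv_dir).glob("*.csv")):
        time_range = log_time_range(path)
        if time_range is None:
            continue  # drop logs without valid start and end times
        table.append({"path": path, "start_us": time_range[0], "end_us": time_range[1]})
    return table
```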

Finally, we’ll plot all of the log start and end times to identify any outliers.

Each log’s start and end times vary slightly but are generally uniform. No sensors started mid-flight or stopped long after landing.

Extract absolute flight time

To get an absolute flight start time, we’ll use the time_utc_usec column from the GPS sensor log file.
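The conversion can be sketched as follows. A single GPS row provides both the relative `timestamp` and the absolute `time_utc_usec`, and their difference is the offset between the two clocks. The function names are hypothetical, and the sketch assumes the chosen GPS row carries a nonzero `time_utc_usec` (rows recorded before the receiver has a time fix may not).

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def usec_to_datetime(epoch_usec: int) -> datetime:
    """Convert microseconds since the Unix epoch to a timezone-aware UTC datetime."""
    return EPOCH + timedelta(microseconds=epoch_usec)


def absolute_time(relative_usec: int, gps_timestamp_usec: int, gps_time_utc_usec: int) -> datetime:
    """Map a relative log timestamp to UTC using one GPS row as the reference."""
    # Offset between the UTC clock and the log's relative clock.
    offset_usec = gps_time_utc_usec - gps_timestamp_usec
    return usec_to_datetime(relative_usec + offset_usec)
```

Applying `absolute_time` to the earliest and latest relative timestamps in the lookup table gives the absolute flight start and end.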

Create PX4 log run

In Nominal, Runs are containers of multimodal test data - including Datasets, Videos, Logs, and database connections.

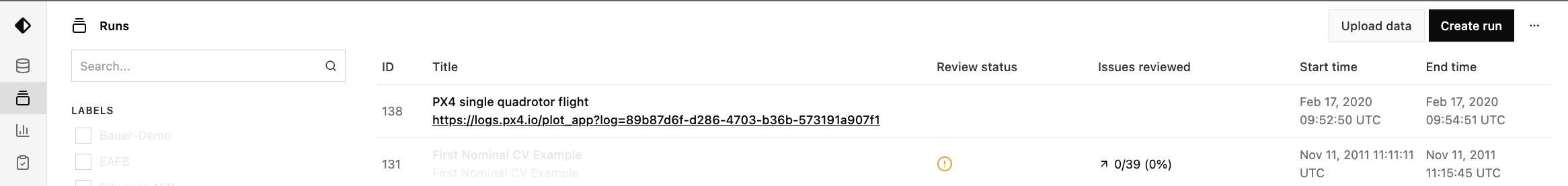

To see your organization’s latest Runs, head over to the Runs page.

We’ll use the create_run() routine to create a Run with the flight start and end times that we identified above.
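A hedged sketch of the Run creation step. It wraps `create_run()` in a hypothetical helper that takes an already authenticated client, and the keyword names `name`, `start`, and `end` are an assumption about the client's signature that should be checked against the installed version.

```python
from datetime import datetime


def create_flight_run(client, start: datetime, end: datetime,
                      name: str = "PX4 single quadrotor flight"):
    """Create a Run that spans the flight; `client` is an authenticated Nominal client."""
    if end < start:
        raise ValueError("flight end precedes flight start")
    # Assumption: create_run() accepts these keyword arguments.
    return client.create_run(name=name, start=start, end=end)
```

Passing timezone-aware UTC datetimes for `start` and `end` avoids ambiguity about which clock the flight times refer to.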

If you head over to the Runs page on Nominal (login required), you’ll see the “PX4 single quadrotor flight” at the top:

Bulk upload all PX4 logs

To upload all ~50 PX4 log files to the quadrotor run, we’ll iterate through the lookup table that we created and validated above.

There may be multiple CSV files associated with each log category, e.g. _battery_status_0.csv and _battery_status_1.csv. We combine these into a single battery_status.csv file.
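Grouping the files by category can be sketched as below. The sketch assumes each CSV is named with the ULog file's stem, then the category, then a numeric instance suffix; `log_category`, `group_by_category`, and the `ulog_stem` parameter are hypothetical names.

```python
import re
from collections import defaultdict
from pathlib import Path

# Matches a trailing instance number such as "_0" or "_1".
_INSTANCE_SUFFIX = re.compile(r"_\d+$")


def log_category(csv_path, ulog_stem: str) -> str:
    """Strip the ULog prefix and the instance suffix from a CSV file name."""
    stem = Path(csv_path).stem
    prefix = ulog_stem + "_"
    if stem.startswith(prefix):
        stem = stem[len(prefix):]
    return _INSTANCE_SUFFIX.sub("", stem)


def group_by_category(csv_paths, ulog_stem: str) -> dict:
    """Map each category name to the sorted list of CSV paths that belong to it."""
    groups = defaultdict(list)
    for path in sorted(csv_paths, key=str):
        groups[log_category(path, ulog_stem)].append(Path(path))
    return dict(groups)
```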

For each category, we create a dataset using client.create_dataset, and then use Dataset.add_tabular_data() to upload related CSV files. Then, we’ll use Run.add_dataset() to associate the dataset with the run.
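The loop can be sketched roughly as follows, given a mapping from category name to that category's CSV paths. The `upload_categories` helper is hypothetical, and the argument names passed to `create_dataset`, `add_tabular_data`, and `add_dataset` are assumptions to verify against the installed client. The timestamp type is supplied by the caller and should describe relative microseconds.

```python
def upload_categories(client, run, groups: dict, timestamp_type) -> dict:
    """Create one dataset per category, upload its CSVs, and attach it to the run."""
    datasets = {}
    for category, csv_paths in sorted(groups.items()):
        dataset = client.create_dataset(category)
        for csv_path in csv_paths:
            # Assumption: keyword names below match the client's add_tabular_data().
            dataset.add_tabular_data(
                csv_path,
                timestamp_column="timestamp",
                timestamp_type=timestamp_type,
            )
        # The category doubles as the reference name of the dataset within the run.
        run.add_dataset(category, dataset)
        datasets[category] = dataset
    return datasets
```

Uploading every instance file into the same dataset is what merges, for example, the two battery status logs into one category.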

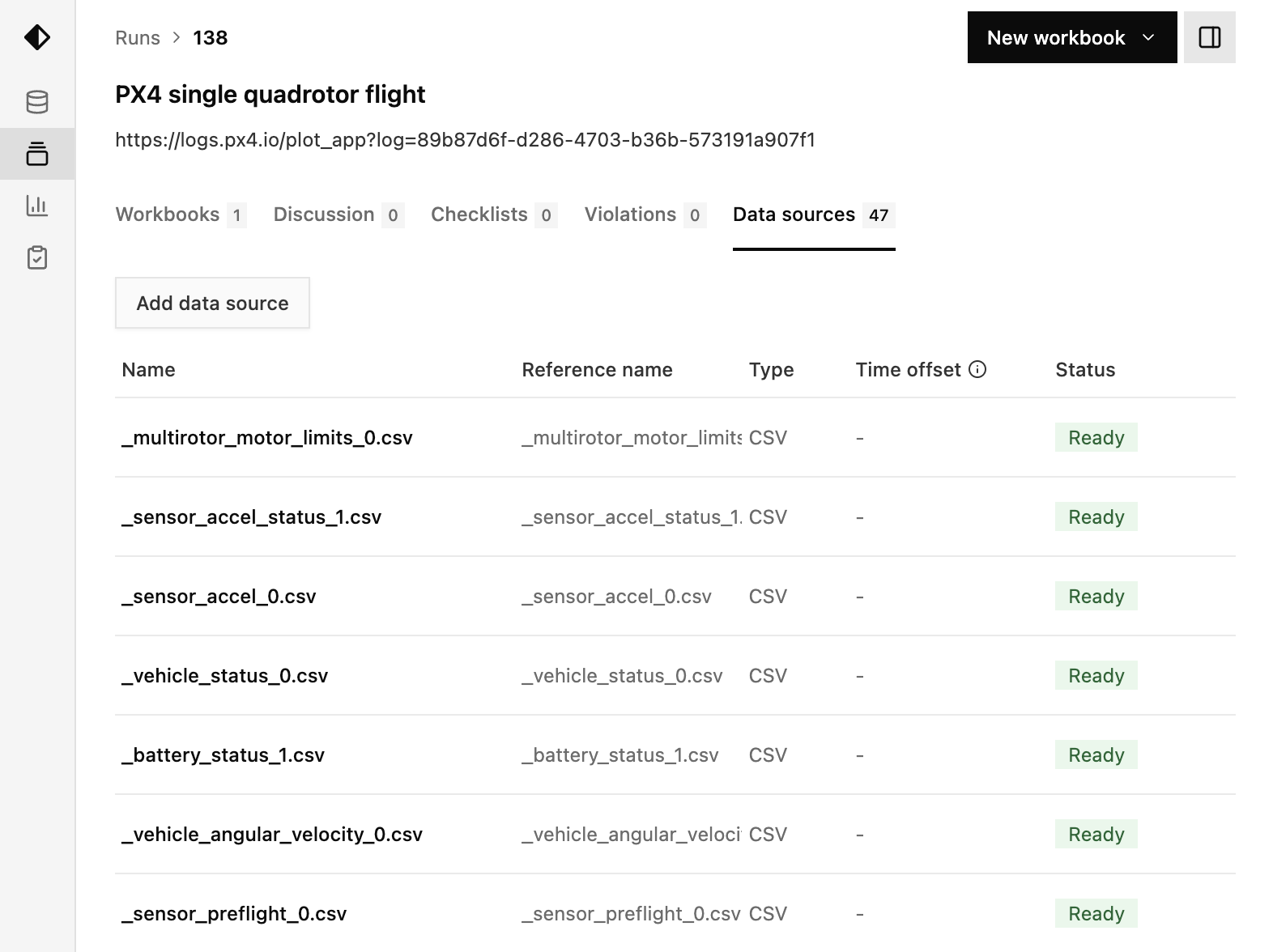

On Nominal, navigate from the Runs page to “PX4 single quadrotor flight”. In the “Data scopes” tab, you should see ~40 datasets.

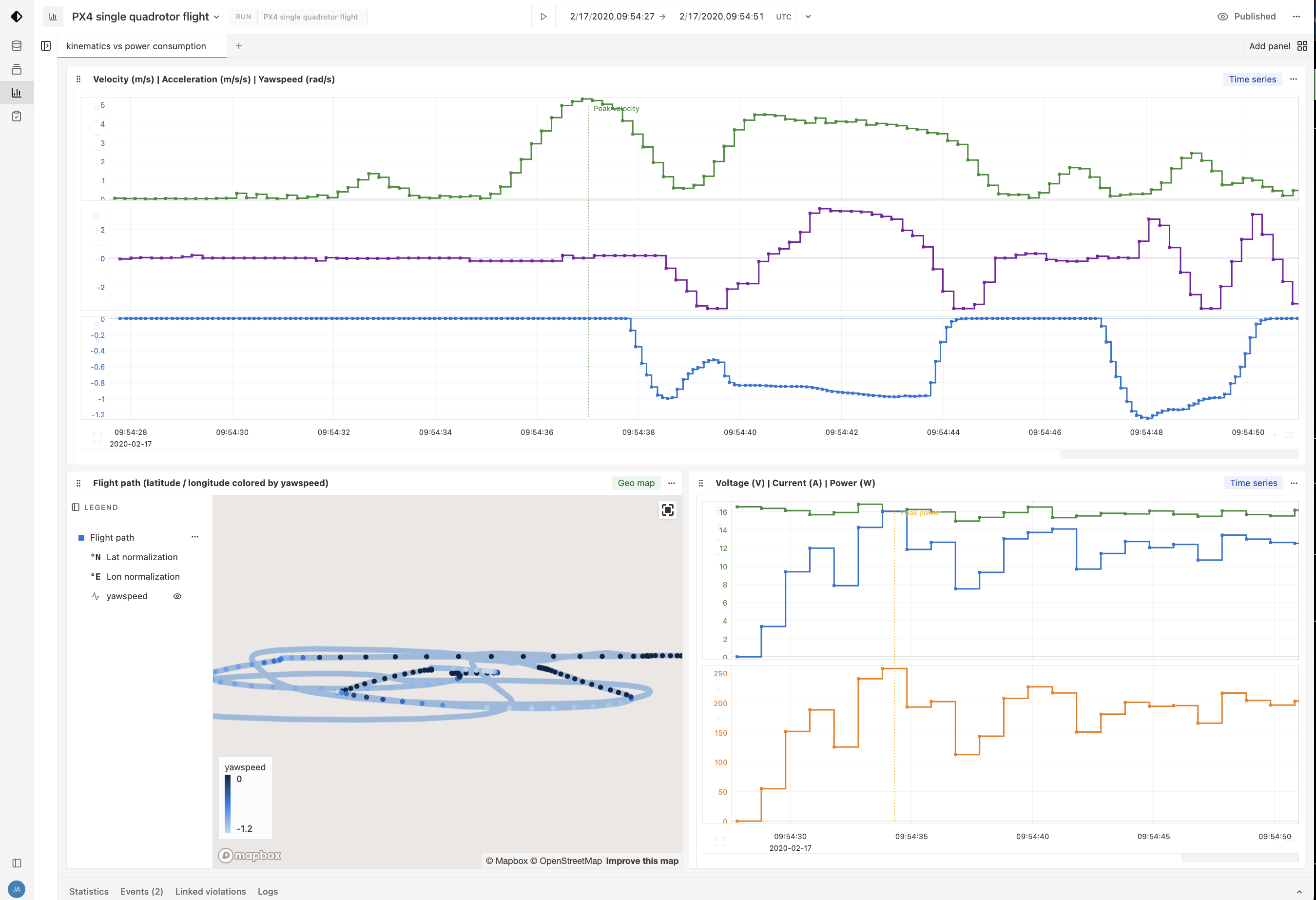

Create a workbook

Now that all of the flight data is organized as a test Run on Nominal, it can be collaboratively visualized, analyzed, and benchmarked as a reference for future flights. See Creating a workbook for more information.

Appendix

Inspect ULog metadata

Run the ulog_info command to extract high-level log file parameters such as the flight computer RTOS and version.
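The same metadata is also reachable from Python through pyulog's `ULog` class. The sketch below is an alternative to the command-line tool, not a replacement for it; `summarize_ulog` and the keys of the returned dictionary are hypothetical.

```python
def summarize_ulog(ulog_path: str) -> dict:
    """Collect high-level metadata from a ULog file using pyulog."""
    # Imported lazily so this module loads even before pyulog is installed.
    from pyulog import ULog

    ulog = ULog(ulog_path)
    return {
        # Information messages written by the flight computer (system name, versions, ...).
        "info": dict(ulog.msg_info_dict),
        # Logging duration, from the first to the last recorded timestamp (microseconds).
        "duration_s": (ulog.last_timestamp - ulog.start_timestamp) / 1_000_000,
        # Names of all logged topics, de-duplicated across instances.
        "topics": sorted({data.name for data in ulog.data_list}),
    }
```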